In our experience, traditional warehouses are best suited to analyzing structured data from various systems and producing insights with known and relatively stable measurements. A Hadoop-based platform, on the other hand, is well suited to semi-structured and unstructured data, and to cases where a data discovery process is needed. That isn’t to say that Hadoop can’t be used for structured data that is readily available in a raw format.

Business Intelligence (BI):

BI enables an organization to gain insight into the performance of an enterprise by analyzing data generated by its business processes and information systems.

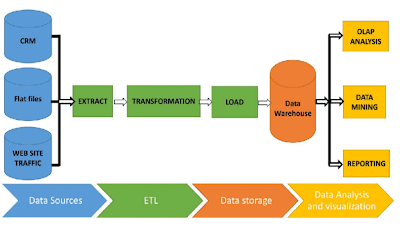

BI applies analytics to large amounts of data across the enterprise, which has typically been consolidated into an enterprise data warehouse to run analytical queries.

The output of BI can be surfaced to a dashboard that allows managers to access and analyze the results and potentially refine the analytic queries to further explore the data.

Tools used in Big Data Analytics

Processing structured data is something that your traditional database is already good at. Structured data, by definition, is easy to enter, store, query and analyze. It conforms nicely to a fixed schema model of neat columns and rows that can be manipulated with Structured Query Language (SQL) to establish relationships.
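To make the fixed-schema idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for a warehouse database. The `customers` and `orders` tables, their columns, and the helper names `build_demo_db` and `revenue_by_customer` are hypothetical and exist only for illustration.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with two hypothetical, fixed-schema tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (
            id   INTEGER PRIMARY KEY,
            name TEXT NOT NULL
        );
        CREATE TABLE orders (
            id          INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(id),
            amount      REAL NOT NULL
        );
        INSERT INTO customers VALUES (1, 'Acme'), (2, 'Globex');
        INSERT INTO orders VALUES (1, 1, 120.0), (2, 1, 80.0), (3, 2, 50.0);
        """
    )
    return conn


def revenue_by_customer(conn):
    """Relate the two tables through customer_id and total the order amounts."""
    return conn.execute(
        """
        SELECT c.name, SUM(o.amount) AS total
        FROM customers AS c
        JOIN orders AS o ON o.customer_id = c.id
        GROUP BY c.name
        ORDER BY c.name
        """
    ).fetchall()


if __name__ == "__main__":
    for name, total in revenue_by_customer(build_demo_db()):
        print(name, total)
```

Because every row has the same columns and types, the relationship between the tables can be expressed in a single declarative join.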

Semi-structured and unstructured data is a different matter, and this is where Hadoop comes in. Such data is raw, complex, and pours in from multiple sources such as emails, text documents, videos, photos, social media posts, Twitter feeds, sensors and click streams.
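By way of contrast with neat rows and columns, the sketch below shows what a first data discovery pass over semi-structured records might look like. It is plain Python rather than a Hadoop job, and the sample events in `RAW_EVENTS` and the helper `discover_fields` are made up for illustration.

```python
import json
from collections import Counter

# Hypothetical raw events from mixed sources; no two share the same shape.
RAW_EVENTS = [
    '{"type": "email", "from": "a@example.com", "subject": "Hi"}',
    '{"type": "click", "url": "/home", "ts": 1700000000}',
    '{"type": "sensor", "ts": 1700000005, "reading": {"temp_c": 21.5}}',
    "not json at all",
]


def discover_fields(lines):
    """Count how often each top-level field appears; skip unparseable lines."""
    field_counts = Counter()
    skipped = 0
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            skipped += 1
            continue
        if not isinstance(record, dict):
            skipped += 1
            continue
        field_counts.update(record.keys())
    return field_counts, skipped


if __name__ == "__main__":
    counts, skipped = discover_fields(RAW_EVENTS)
    print(dict(counts), "skipped:", skipped)
```

There is no schema to declare up front here: the structure is discovered from the data itself, which is the kind of work that is awkward to express against a fixed set of columns.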

Storing, managing and analyzing massive volumes of semi-structured and unstructured data is what Hadoop was purpose-built to do. Hadoop as a Service provides a scalable solution to meet ever-increasing data storage and processing demands that the data warehouse can no longer handle. With elastic scale and on-demand access to compute and storage capacity, Hadoop as a Service is a strong match for big data processing.

Keeping costs down is a concern for every business, and traditional relational databases are certainly cost effective. If you are considering adding Hadoop to your data warehouse, it’s important to make sure that your company’s big data demands are genuine and that the potential benefits to be realized from implementing Hadoop will outweigh the costs.

Shorter time-to-insight requires interactive querying over smaller data sets in real time or near real time, and that’s a task that the data warehouse has been well equipped to handle. However, thanks to powerful Hadoop processing engines such as Spark, Hive, HBase ..
