# Program on WordCount.java

    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class WordCount {

        // Mapper: emits (word, 1) for every whitespace-separated token of a line.
        public static class TokenizerMapper
                extends Mapper<Object, Text, Text, IntWritable> {

            private static final IntWritable one = new IntWritable(1);
            private final Text word = new Text();

            @Override
            public void map(Object key, Text value, Context context)
                    throws IOException, InterruptedException {
                StringTokenizer itr = new StringTokenizer(value.toString());
                while (itr.hasMoreTokens()) {
                    word.set(itr.nextToken());
                    context.write(word, one);
                }
            }
        }

        // Reducer: sums all counts received for one word.
        // It is also used as the combiner, which pre-sums counts on the map side.
        public static class IntSumReducer
                extends Reducer<Text, IntWritable, Text, IntWritable> {

            private final IntWritable result = new IntWritable();

            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context)
                    throws IOException, InterruptedException {
                int sum = 0;
                for (IntWritable val : values) {
                    sum += val.get();
                }
                result.set(sum);
                context.write(key, result);
            }
        }

        // Driver: args[0] is the HDFS input path, args[1] is the HDFS output path.
        public static void main(String[] args) throws Exception {
            if (args.length != 2) {
                System.err.println("Usage: WordCount <input path> <output path>");
                System.exit(2);
            }
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf, "word count");
            job.setJarByClass(WordCount.class);
            job.setMapperClass(TokenizerMapper.class);
            job.setCombinerClass(IntSumReducer.class);
            job.setReducerClass(IntSumReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            FileInputFormat.addInputPath(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));
            // Exit code 0 if the job succeeds, 1 otherwise.
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
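
To see what the job computes without a cluster, the map, combine and reduce phases can be imitated in plain Java. The following is only a minimal sketch, not Hadoop code: the class `LocalWordCountSketch` and its methods `mapAndCombine` and `reduce` are hypothetical names, the two sample strings stand in for input splits, and an in-memory `TreeMap` stands in for the shuffle.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical stand-in for the Hadoop job: no cluster and no Hadoop classes.
class LocalWordCountSketch {

    // Map + combine for one split: tokenize on whitespace (as TokenizerMapper does)
    // and sum the 1s locally (as the combiner does).
    static Map<String, Integer> mapAndCombine(String split) {
        Map<String, Integer> partial = new TreeMap<>();
        StringTokenizer itr = new StringTokenizer(split);
        while (itr.hasMoreTokens()) {
            partial.merge(itr.nextToken(), 1, Integer::sum);
        }
        return partial;
    }

    // Reduce: add up the partial sums of each word across all splits.
    static Map<String, Integer> reduce(List<Map<String, Integer>> partials) {
        Map<String, Integer> totals = new TreeMap<>();
        for (Map<String, Integer> partial : partials) {
            partial.forEach((word, count) -> totals.merge(word, count, Integer::sum));
        }
        return totals;
    }

    public static void main(String[] args) {
        List<Map<String, Integer>> partials = new ArrayList<>();
        partials.add(mapAndCombine("hello hadoop hello"));
        partials.add(mapAndCombine("hadoop word count"));
        // Print one "word<TAB>count" pair per line, sorted by word.
        reduce(partials).forEach((word, count) -> System.out.println(word + "\t" + count));
    }
}
```

Because addition is associative and commutative, summing per split first and then across splits gives the same totals as summing everything at once. This is why the job can reuse IntSumReducer as its combiner.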

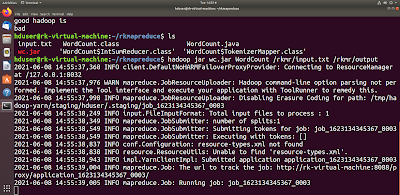

Step 2: Compile WordCount.java, writing the class files to the directory rkmapreduce as shown

    javac -classpath "$(hadoop classpath)" -d /home/hduser/rkmapreduce/ WordCount.java

Step 3: Create a jar file wc.jar from the class files in the directory rkmapreduce as shown

    jar -cvf wc.jar -C /home/hduser/rkmapreduce/ .

Step 4: Create an input file input.txt in the directory rkmapreduce, containing the text whose words we want to count
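
When preparing input.txt, it helps to know how the mapper splits a line: `StringTokenizer` with its default delimiters breaks only on whitespace, so case and punctuation are preserved and `Hello`, `hello` and `hello,` are counted as three different words. A small sketch of this behaviour (the class `TokenizeSketch` and the sample line are made up for illustration):

```java
import java.util.ArrayList;
import java.util.List;
import java.util.StringTokenizer;

// Hypothetical helper that tokenizes a line the same way TokenizerMapper does.
class TokenizeSketch {

    static List<String> tokens(String line) {
        List<String> out = new ArrayList<>();
        StringTokenizer itr = new StringTokenizer(line); // default delimiters: whitespace
        while (itr.hasMoreTokens()) {
            out.add(itr.nextToken());
        }
        return out;
    }

    public static void main(String[] args) {
        // Case and punctuation stay attached to each token.
        System.out.println(tokens("Hello hello, world\tworld."));
    }
}
```

If such variants should be counted together, the input would have to be normalized first (for example lower-cased and stripped of punctuation); the program above does not do this.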

Step 5: Create a directory rkmr in the Hadoop cluster (HDFS) and place the input file input.txt in it as shown

    hdfs dfs -mkdir /rkmr
    hdfs dfs -put /home/hduser/rkmapreduce/input.txt /rkmr

Now we can check the content of the file /rkmr/input.txt using the command

    hdfs dfs -cat /rkmr/input.txt

Step 6: Run the WordCount job on the input file with the command hadoop jar as shown. The output directory /rkmr/output must not already exist, otherwise the job fails

    hadoop jar wc.jar WordCount /rkmr/input.txt /rkmr/output

Step 7: Check the output of the WordCount program using the following commands. The first lists the contents of /rkmr recursively, the second prints the word counts

    hdfs dfs -ls -R /rkmr

    hdfs dfs -cat /rkmr/output/part*
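
Each line of the part files holds a word, a tab character and its count, since tab is the default separator of Hadoop's text output. As an optional follow-up, the counts can be ranked by frequency. The sketch below is only an illustration: the class `TopWordsSketch` and its method `sortByCount` are hypothetical names and are not part of the program above.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical post-processing helper for the "word<TAB>count" output lines.
class TopWordsSketch {

    // Parse the lines and return the entries sorted by descending count,
    // with ties broken alphabetically by word.
    static List<Map.Entry<String, Integer>> sortByCount(List<String> lines) {
        List<Map.Entry<String, Integer>> entries = new ArrayList<>();
        for (String line : lines) {
            int tab = line.indexOf('\t');
            if (tab < 0) {
                continue; // skip lines that are not word<TAB>count
            }
            String word = line.substring(0, tab);
            int count = Integer.parseInt(line.substring(tab + 1).trim());
            entries.add(new SimpleEntry<>(word, count));
        }
        entries.sort((a, b) -> {
            int byCount = Integer.compare(b.getValue(), a.getValue());
            return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey());
        });
        return entries;
    }

    public static void main(String[] args) throws IOException {
        // Read the job output from standard input.
        List<String> lines = new ArrayList<>();
        BufferedReader in = new BufferedReader(new InputStreamReader(System.in));
        for (String line = in.readLine(); line != null; line = in.readLine()) {
            lines.add(line);
        }
        for (Map.Entry<String, Integer> e : sortByCount(lines)) {
            System.out.println(e.getValue() + "\t" + e.getKey());
        }
    }
}
```

After compiling it with javac, it could be fed from the cluster with `hdfs dfs -cat /rkmr/output/part* | java TopWordsSketch`.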